Complete Guide to A/B Testing for Email Campaigns

As a marketer, you know the importance of email campaigns that grab attention and drive results. More opens, higher click-through rates, and, of course, more sales: that's the goal, right? But how can you hit these targets consistently? One of the most effective ways to boost your email campaign performance is A/B testing. By testing different versions of your emails, you can uncover what truly resonates with your audience and make data-driven decisions that improve engagement.

In this guide, we’ll walk you through the ins and outs of A/B testing for email campaigns. We’ll explain what it is, why it’s essential, the best practices to follow, and how artificial intelligence (AI) is changing the game. Let’s see how you can start using A/B testing to refine your email marketing strategy and boost your results.

What is A/B Testing in Email Marketing?

A/B testing is the process of comparing two versions of a campaign to see which one performs better. In the context of email marketing, it involves sending one variation of your email to a randomly selected subset of your subscribers and another variation to a different subset. The goal? To figure out which version of your email drives higher engagement, whether measured by open rates, click-through rates, or conversions.
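Most email platforms handle the split for you, but it helps to see what a fair split looks like: subscribers are shuffled at random so the two groups differ only in the email they receive. This is a minimal sketch assuming your list is a plain Python list of addresses; the `split_for_ab_test` function and the sample addresses are hypothetical.

```python
import random

def split_for_ab_test(subscribers, seed=42):
    """Randomly assign subscribers to variant A or B (a roughly 50/50 split)."""
    shuffled = list(subscribers)           # copy so the caller's list is not mutated
    random.Random(seed).shuffle(shuffled)  # seeded RNG makes the split reproducible
    midpoint = len(shuffled) // 2
    return shuffled[:midpoint], shuffled[midpoint:]

emails = [f"user{i}@example.com" for i in range(10)]  # hypothetical addresses
group_a, group_b = split_for_ab_test(emails)
print(len(group_a), len(group_b))  # 5 5
```

Shuffling before splitting avoids a common bias: if you simply sent version A to the first half of an export, that half might be skewed toward older or more engaged subscribers.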

A/B testing can be as simple as tweaking the subject line or as advanced as testing entire email templates. For example, you might test two different subject lines to see which one gets more opens, or you could experiment with two distinct email designs to see which one drives more clicks.

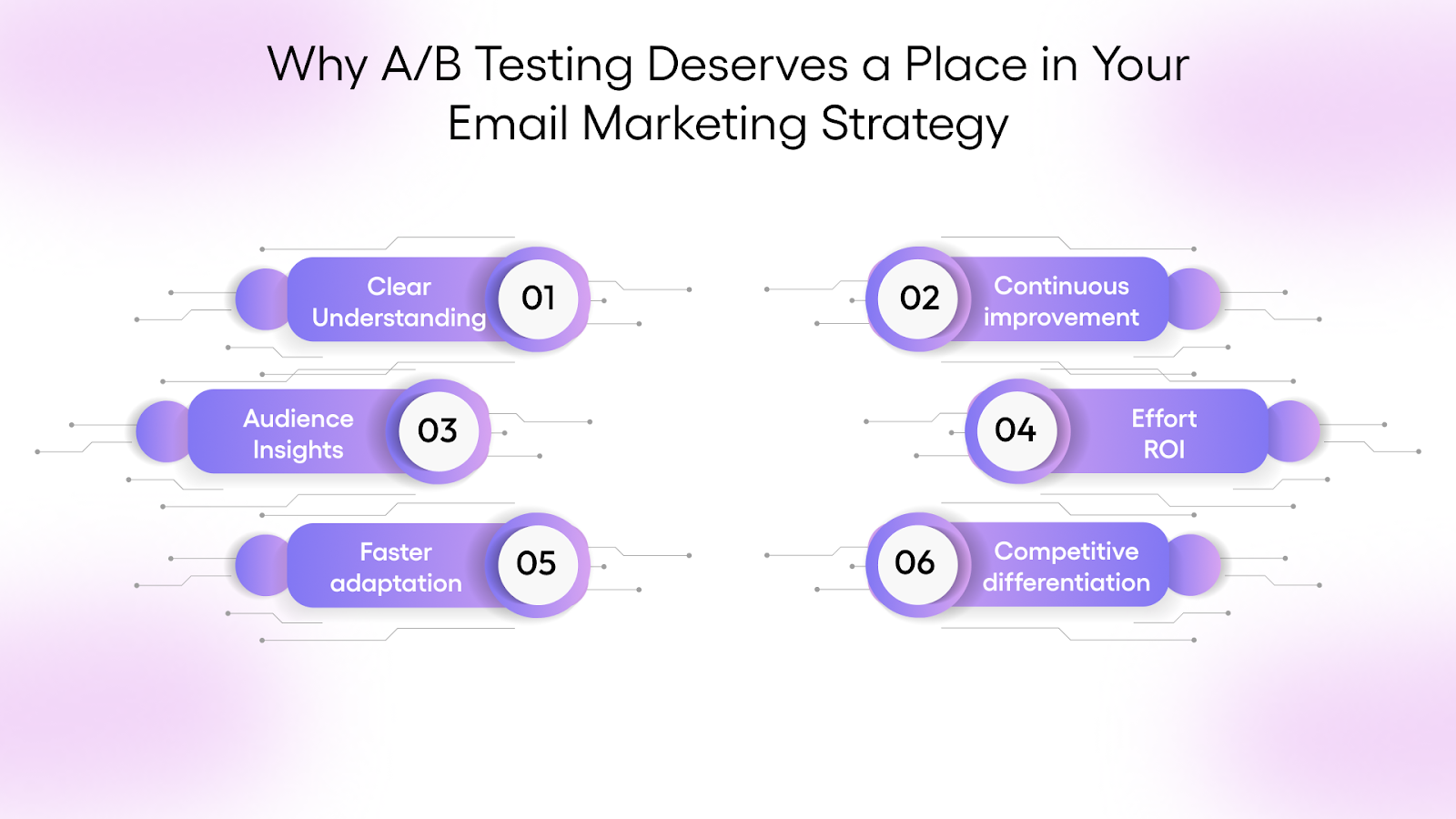

Why A/B Testing Deserves a Place in Your Email Marketing Strategy

In today’s crowded digital landscape, guessing what will resonate with your audience is no longer a viable approach. A/B testing allows marketers to make informed decisions based on how recipients actually respond, not assumptions or general trends. It’s a method grounded in observation and evidence, offering consistent opportunities for refinement.

Here’s why it matters:

- Clarity over assumptions: A/B testing enables you to understand what elements of your email, from subject lines to CTA placement, influence engagement. Instead of relying on generic best practices, you gain insights specific to your audience.

- Continuous improvement: Small, incremental changes can produce measurable results over time. A marginal lift in open rates today could translate to a significant increase in conversions across future campaigns.

- Audience-specific insights: No two subscriber lists are the same. What performs well for one audience may fail to gain traction with another. A/B testing helps identify preferences unique to your list, such as tone, design choices, or sending times.

- Greater return on effort: Testing ensures you are maximizing the impact of each campaign. Rather than increasing frequency or list size, you’re improving the effectiveness of what’s already in place.

- Faster adaptation: In a changing environment, A/B testing provides a structured way to respond quickly. If performance drops or audience behavior shifts, testing helps you adapt without having to overhaul your entire strategy.

- Competitive differentiation: Many marketers skip testing due to time constraints or resource limitations. Incorporating it into your process gives you a strategic advantage by continuously refining your approach, even if others do not.

Ultimately, A/B testing is not a one-time optimization tactic; it’s a long-term discipline. When applied consistently, it elevates campaign performance and deepens your understanding of what drives meaningful engagement within your audience.

For teams looking to go a step further, platforms like TLM offer more than just analytics. With built-in campaign management and integrated lead tracking, TLM helps you act on your A/B testing insights faster, turning every learning into a sharper, more personalized customer journey.

Read: The Best Time to Send Marketing Emails in 2025

What You Should Actually Be Testing in Your Email Campaigns

Most blogs will tell you to test subject lines, CTAs, and images, and while those basics matter, they barely scratch the surface. If you're serious about improving email performance, it's time to go deeper. Here are a few elements worth testing, not just because they’re testable, but because they can uncover insights that genuinely change how you approach email.

- Subject Lines That Reflect Real Human Behavior

Instead of simply testing length or emoji use, ask: What emotion or mental trigger are you tapping into?

- Curiosity vs. Clarity: A subject like “You’re not going to want to miss this” may spark curiosity-driven opens, while “25% off ends today” is direct and leans on urgency.

- Personal relevance: Test adding contextual elements (location, product viewed, or industry) to see whether familiarity outperforms generalization.

The goal isn’t just more opens; it’s getting the right people to open with intent.

- Sender Name: Who Do They Want to Hear From?

It’s easy to overlook, but the sender name alone can influence open rates by 20% or more. Try:

- Business Name vs. Humanized Sender: “Company Name” vs. “Alex from the Team”. Adding a personal touch to the sender name can increase open rates by making the email feel more relatable, as if it's coming from a real person rather than a faceless brand. Test both to see which builds more trust with your audience.

- Consistency vs. Variation: Keep the same sender across all campaigns, or adjust it by campaign type (e.g., promotions vs. onboarding).

This isn’t about tricks; it’s about clarity and trust. People open emails from names they recognize or associate with relevance.

Read: How to Build Effective B2B Email Lists

- Send Timing: Don’t Just “Test Tuesday at Noon”

While it's true that Tuesday at noon is statistically popular, your list might behave differently. Instead of blindly following benchmarks:

- Test windows, not just days: Try sending between 9–11 AM or 4–6 PM, especially for professionals who clear their inboxes early or late.

- Look at behavioral send timing: If someone typically opens your emails late at night, consider triggering your next send around that time.

And yes, Saturday might surprise you. Some brands see their highest open rates when there's less inbox competition on weekends.
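A simple way to act on behavioral send timing is to find the hour at which each subscriber has most often opened your past emails. The sketch below assumes you can export open events as (email, timestamp) pairs already converted to each subscriber's local time; the `preferred_send_hour` function and the sample data are hypothetical, and a frequency count like this is a basic heuristic, not a predictive model.

```python
from collections import Counter, defaultdict
from datetime import datetime

def preferred_send_hour(open_events):
    """Return each subscriber's most frequent open hour (0-23)."""
    hours_by_subscriber = defaultdict(Counter)
    for email, opened_at in open_events:
        hours_by_subscriber[email][opened_at.hour] += 1
    # most_common(1) returns [(hour, count)]; ties go to the hour seen first
    return {email: counts.most_common(1)[0][0]
            for email, counts in hours_by_subscriber.items()}

# Hypothetical open history, timestamps in each subscriber's local time
events = [
    ("ana@example.com", datetime(2025, 3, 3, 22, 15)),
    ("ana@example.com", datetime(2025, 3, 10, 22, 40)),
    ("ana@example.com", datetime(2025, 3, 12, 9, 5)),
    ("ben@example.com", datetime(2025, 3, 4, 7, 30)),
]
print(preferred_send_hour(events))  # {'ana@example.com': 22, 'ben@example.com': 7}
```

With only a handful of opens per person, these estimates are noisy, so it is worth A/B testing "send at preferred hour" against your default send time before rolling it out.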

- Preview Text: The Most Ignored Real Estate

If you're not testing preview text, you’re missing a chance to add nuance to your subject line.

- Reinforce or contrast: Does a direct subject benefit from a softer preheader? Or vice versa?

- First-person vs. third-person: “Here’s what I think you’ll like” feels more conversational than “Check out this offer.”

Small tests here can quietly lift open rates, especially on mobile, where preview text is more visible than ever.

Read: Proven Ways to Increase Your Email Marketing ROI

- Email Layout & Flow: How Easy Is It to Scan?

A beautifully written email won’t be effective if it’s difficult to read. Beyond testing visuals:

- CTA placement: Top? Middle? End? Try variations. Some audiences decide early, others need context.

- Scroll testing: Are people even getting to your CTA? If not, maybe your intro is too long or visually heavy.

You’re not just testing for clicks; you’re testing for clarity.

- One More That’s Rarely Talked About:

The fallback CTA: a second, “softer” action for people who aren’t ready to convert.

- For example, if your main CTA is “Book a Demo,” test adding a subtle link like “Learn more” or “Read our case study.”

- This gives you a chance to retain mid-funnel interest, rather than losing the click entirely.

If all your A/B tests are surface-level, don’t expect deep results. Instead of endlessly tweaking buttons and colors, test elements that reflect how real people read, click, hesitate, or trust. That’s where meaningful optimization lives.

Read: What are the Abstract Marketing Ideas for B2B Lead Generation?

Best Practices for Effective A/B Testing

A/B testing can significantly boost your email marketing efforts, but to see meaningful results, it’s crucial to implement it correctly. Here are some best practices that can help you make the most of your A/B tests:

Identify Clear Goals

Before you dive into A/B testing, it’s important to define what you’re hoping to achieve. Are you looking to increase open rates? Boost click-through rates? Or drive more conversions? By setting specific goals, you’ll know exactly what to focus on, allowing you to prioritize changes that will have the greatest impact. For example, if your goal is to increase conversions, you might want to test the placement or wording of your CTA.

Test One Variable at a Time

One of the keys to successful A/B testing is simplicity. Testing one variable at a time helps you isolate the factors that influence your email’s performance. If you change more than one variable, say, the subject line, images, and CTA all at once, you won’t be able to pinpoint which change made the difference. For clarity, test different subject lines in one campaign, and only once you've determined which one works best, move on to testing another element, such as images or copy.

Define Your Audience Properly

Effective A/B testing begins with understanding who you’re targeting. Instead of applying the same test across your entire list, segment your audience by behavior, lifecycle stage, or engagement level.

A subject line that resonates with long-time customers may not appeal to new subscribers. Likewise, timing, tone, and offers might need to shift depending on purchase history or interaction frequency.

For example, try testing different messaging for leads who’ve shown interest in your product but haven’t converted, versus those actively engaging with your content.

These nuanced insights help you move from broad assumptions to precise, data-backed decisions, turning your email strategy into something far more targeted and effective.

If your campaigns demand that level of segmentation and lead intelligence, The Lead Market (TLM) helps you tailor each campaign to specific buyer groups, making your outreach more relevant, timely, and more likely to convert.

Ready to transform how you generate and manage leads? Discover how TLM can help you focus on what matters most, turning the right contacts into real opportunities.

Consider External Factors

External variables, such as the time of day, the device used (mobile vs. desktop), or even differences between email clients, can affect your results. For example, sending at a different time could lead to better engagement depending on your audience's habits. Similarly, mobile users may have different preferences from desktop users. Account for these variables in your analysis so that conditions outside the test don't skew your results.

By following these best practices, you’ll set up a solid foundation for your A/B tests. That said, you’re likely to encounter a few challenges along the way.

M&A Advisory Gains Significant Leads with TLM's B2B Email Campaign

A California-based M&A advisory firm, specializing in brokering deals between buying and selling companies in the managed service provider (MSP) sector, faced challenges in generating qualified leads.

With higher demand from buyers than from sellers and difficulties in targeting the right MSPs, the client struggled to meet their lead generation goals. Their sales efforts through cold calling had limited success.

TLM stepped in with a tailored B2B email marketing campaign, focusing on mid-sized MSPs with 20-150 employees. Using a three-touchpoint approach, we worked closely with the client to book appointments for the owner's calendar.

The campaign achieved immediate success, generating its first lead within the first month. By the second month, the number of leads increased to two to three per month. After optimizing the approach with a two-touchpoint strategy, we generated around 13 inquiries. The client was pleased with the results, noting that we exceeded their expectations.

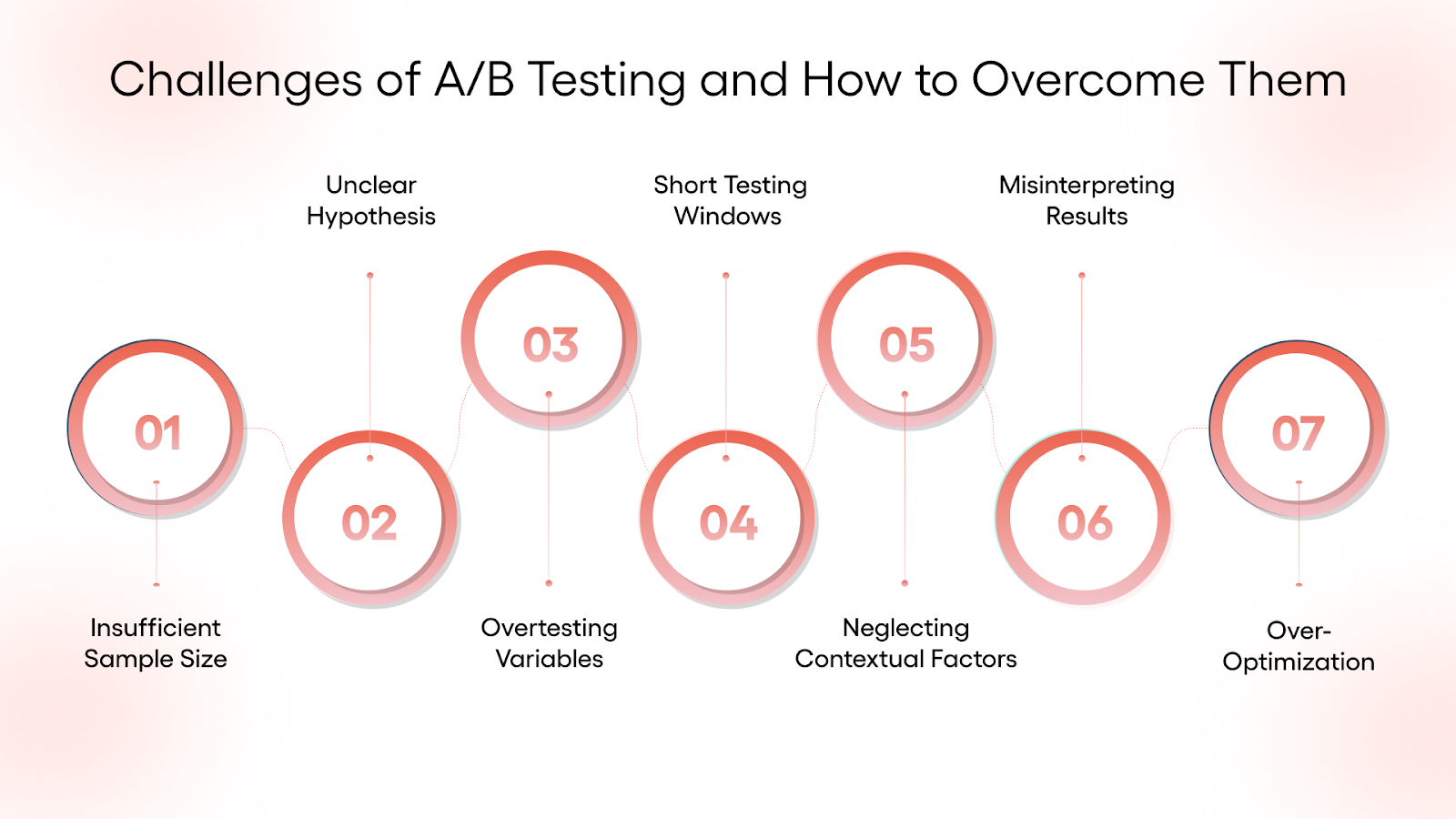

Challenges of A/B Testing and How to Overcome Them

While A/B testing can sharpen your email campaigns, it’s not without obstacles. To get truly useful results, you’ll need to navigate these common (and often overlooked) challenges:

1. Insufficient Sample Size

This is one of the most frequent pitfalls. Running a test with too few recipients can produce misleading results that don’t hold up at scale. To avoid this, calculate your minimum required sample size before you send, using a statistical calculator or the one built into your ESP. Don’t stop the test early just because one version pulls ahead; let it reach the planned sample size, then check whether the difference is statistically significant before drawing conclusions.
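To see why sample size matters, here is one common approximation for the number of recipients each variant needs in a two-sided, two-proportion test. This is a sketch using only Python's standard library, assuming a 5% significance level and 80% power; the `sample_size_per_variant` function is hypothetical, and ESP calculators may use slightly different formulas.

```python
import math
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, expected_rate, alpha=0.05, power=0.80):
    """Approximate recipients needed in EACH variant for a two-sided
    two-proportion z-test (normal approximation, no continuity correction)."""
    z = NormalDist()                    # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_beta = z.inv_cdf(power)           # quantile for the desired power
    p_bar = (baseline_rate + expected_rate) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(baseline_rate * (1 - baseline_rate)
                             + expected_rate * (1 - expected_rate))
    ) ** 2
    return math.ceil(numerator / (baseline_rate - expected_rate) ** 2)

# Hypothetical goal: detect a lift from a 20% to a 22% open rate
print(sample_size_per_variant(0.20, 0.22))
```

For this example the answer is roughly 6,500 recipients per variant. Smaller expected lifts push the number up quickly, which is why a list of a few thousand subscribers can rarely confirm a one- or two-point difference.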

2. Unclear Hypothesis

Testing without a clear “why” often leads to random experiments with no actionable outcomes. Always start with a hypothesis. For instance, instead of “Let’s test two CTAs,” frame it as: “We believe a value-based CTA (‘Get my guide’) will outperform a generic one (‘Click here’) because it’s more specific.”

3. Testing Too Many Variables at Once

It’s tempting to test multiple changes, a new subject line, new visuals, and a new CTA, but this muddles your results. If one version performs better, you won’t know why. Stick to one variable at a time unless you’re using multivariate testing (which requires far larger audiences).
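The arithmetic behind “far larger audiences” is easy to check. In a full-factorial multivariate test, every combination of elements becomes its own variant, and each one needs enough recipients on its own. The element names and the 5,000-recipients-per-variant figure below are hypothetical placeholders.

```python
from itertools import product

# Hypothetical options for three elements
subject_lines = ["Curiosity", "Direct offer"]
hero_images = ["Product shot", "Lifestyle photo"]
ctas = ["Get my guide", "Read the case study"]

# Every combination is a separate variant
variants = list(product(subject_lines, hero_images, ctas))
print(len(variants))  # 8

recipients_per_variant = 5000  # hypothetical per-variant sample size
print(len(variants) * recipients_per_variant)  # 40000
```

Three elements with two options each produce eight variants, so the per-variant sample size that needs 10,000 recipients for a simple A/B test needs 40,000 here.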

4. Short Testing Windows

Running a test for just a few hours can skew results, especially if your audience opens emails at different times. Let your test run for at least 24–48 hours or longer, depending on your send size and engagement patterns, to capture accurate behavior.

5. Neglecting Contextual Factors

Even the best A/B test can fail if external variables aren't considered: seasonality, industry news, and even time zone differences can affect behavior. Avoid comparing results across radically different timeframes or campaign types.

6. Misinterpreting Results

Just because variation B “wins” doesn’t mean it's always better. Look deeper: did it win by a meaningful margin? Was the increase worth implementing at scale? Always review statistical significance and confidence intervals before rolling out a change.
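To check whether a “win” is meaningful, you can run a two-proportion z-test and compute a confidence interval for the difference between the variants. This is a minimal sketch using only Python's standard library; the `compare_variants` function and the counts in the example are hypothetical, and your ESP or a statistics package may use a slightly different method (for example, a continuity correction).

```python
import math
from statistics import NormalDist

def compare_variants(conv_a, sent_a, conv_b, sent_b, confidence=0.95):
    """Two-sided two-proportion z-test plus a confidence interval
    for the difference in rates (B minus A)."""
    p_a, p_b = conv_a / sent_a, conv_b / sent_b
    diff = p_b - p_a
    # Pooled rate under the null hypothesis that A and B perform the same
    p_pool = (conv_a + conv_b) / (sent_a + sent_b)
    se_pooled = math.sqrt(p_pool * (1 - p_pool) * (1 / sent_a + 1 / sent_b))
    z_stat = diff / se_pooled
    p_value = 2 * (1 - NormalDist().cdf(abs(z_stat)))
    # Unpooled standard error for the (Wald) confidence interval
    se_diff = math.sqrt(p_a * (1 - p_a) / sent_a + p_b * (1 - p_b) / sent_b)
    z_crit = NormalDist().inv_cdf(1 - (1 - confidence) / 2)
    ci = (diff - z_crit * se_diff, diff + z_crit * se_diff)
    return diff, p_value, ci

# Hypothetical results: 1,000 vs. 1,100 opens on 5,000 sends each
diff, p_value, ci = compare_variants(conv_a=1000, sent_a=5000, conv_b=1100, sent_b=5000)
print(f"difference: {diff * 100:.1f} pts, p-value: {p_value:.3f}, "
      f"95% CI: {ci[0] * 100:.1f} to {ci[1] * 100:.1f} pts")
```

With these made-up numbers (20% vs. 22%), the p-value is roughly 0.014 and the 95% interval runs from about 0.4 to 3.6 percentage points. The result is significant, but the lower end of the interval shows the true lift could be small, which is exactly the “meaningful margin” question worth asking before rolling out a change.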

7. Over-Optimization

Constant testing and tweaking can make emails feel overly engineered. Sometimes, especially with brand-led or emotionally driven campaigns, sticking to a consistent tone or look matters more than tiny performance lifts. Don’t lose sight of the bigger picture.

A/B testing isn’t just about picking the “better” version; it’s about learning what truly connects with your audience. With the right approach and a bit of patience, the insights you gain can shape smarter, more confident marketing decisions.

Read: 5 Email Types That Should Never Be Automated and Require a Personal Touch

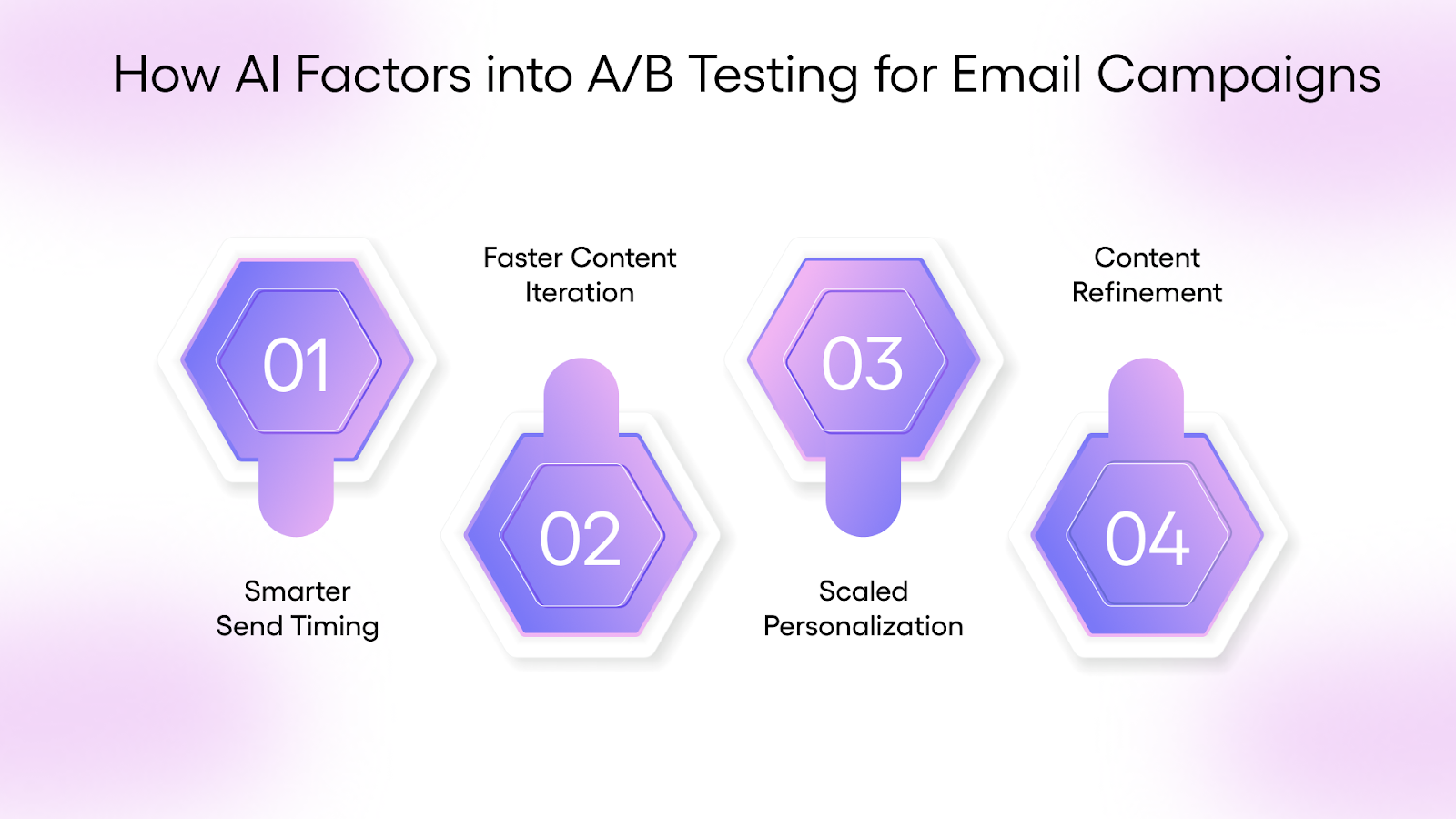

How AI Factors into A/B Testing for Email Campaigns

AI has quietly changed how many marketers approach A/B testing, not by replacing thoughtful strategy, but by offering tools to test and iterate more efficiently. Here’s where it can be useful, especially when used with intention:

- Smarter Send Timing

Predictive models can analyze engagement history to recommend send times tailored to when recipients are most likely to open emails. Rather than relying on general benchmarks or guesswork, AI helps you align with actual user behavior.

- Faster Content Iteration

Generative tools make it easier to explore variations of subject lines, CTAs, and even short-form content without starting from scratch each time. This doesn’t replace creative thinking, but it can support quicker cycles of testing and refinement.

- Segmentation and Personalization at Scale

AI can support deeper segmentation by identifying behavioral or demographic patterns. Done right, this makes personalization more targeted and manageable, especially for larger lists. But human oversight is still key: data can suggest what to say, but how you say it still needs your brand’s voice and understanding.

- Content Review and Optimization

Some platforms now offer AI-driven feedback on tone, length, or clarity. These are helpful starting points, but shouldn’t override judgment or context. A well-crafted message that understands the reader’s priorities will always outperform one that’s purely machine-tuned.

The takeaway? AI is a tool, not a substitute for clarity, empathy, or relevance. Used thoughtfully, it can support A/B testing workflows. But the heart of high-performing email remains the same: understanding your audience and communicating in ways that genuinely resonate.

Read: Manual Marketing Work Still Matters, Even When You Use Automation Tools

Boost B2B Lead Generation with TLM’s Strategic Email Campaigns

A/B testing reveals what works, but applying those insights at scale requires a partner who understands the nuances of B2B outreach. At TLM, we don’t stop at clicks or opens. We help you take what you’ve learned and translate it into personalized, high-performing campaigns that align with your audience, your goals, and your growth.

From segment-specific content to appointment-ready leads, our team brings structure, speed, and strategy to your email marketing, so you’re not just sending better emails; you’re starting better conversations with the right people.

Let’s explore how smarter campaigns can drive stronger results.